Event-based data loading

With the Real-Time Intelligence service we also get the Real-Time Hub. The hub gives us an overview of the streaming data in our Fabric installation, provides easy access to defining new data streams, and lastly lets us reach events happening across the entire Azure tenant.

The Fabric Events part of the Real-Time Hub is where this blog post takes its leap, showing you the possibilities this service gives us.

A history of Synapse Analytics and Azure Data Factory

With Synapse Analytics and Azure Data Factory, we could trigger pipelines based on events happening in Azure Blob Storage. Whenever a file was loaded into a storage account, a specific pipeline could be started.

The initial release of Fabric did not have this kind of trigger for executing other elements in the stack.

With Fabric Events, this, and a lot more, is now possible.

The Fabric Events in the Real-Time Hub

The Fabric Events are found in the Real-Time Hub as the third element in the top list. Here you can choose between two categories of events:

- Azure Blob Storage events

- Fabric Workspace item events

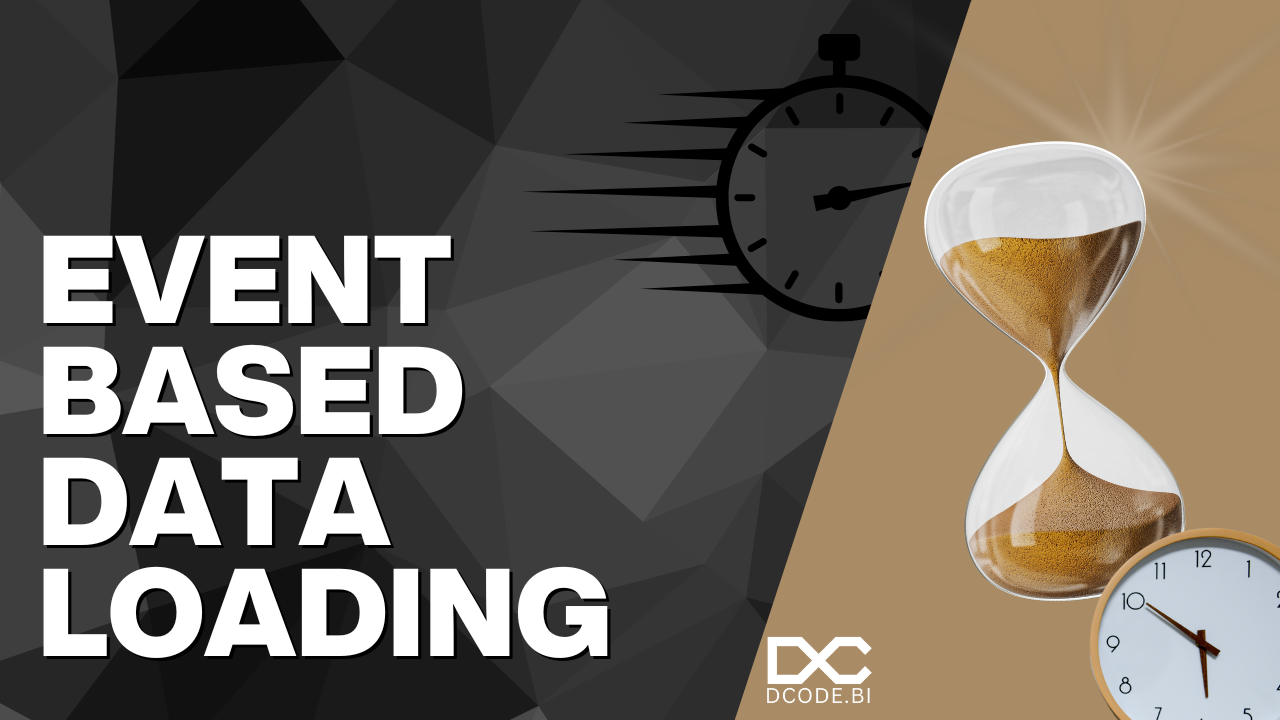

Azure Blob Storage events

This part is a “copy” of the functionality from the Synapse and ADF services, with a bit more: we can now react to many more events happening in Blob Storage.

We can react to blobs being created, deleted and renamed, as well as to changes of a blob’s storage tier.

With the rest of the event list, we have huge possibilities to react to what is happening in Azure Blob Storage. And this is not limited to storage accounts in a single Azure subscription: we can listen to events in all the subscriptions we have access to.

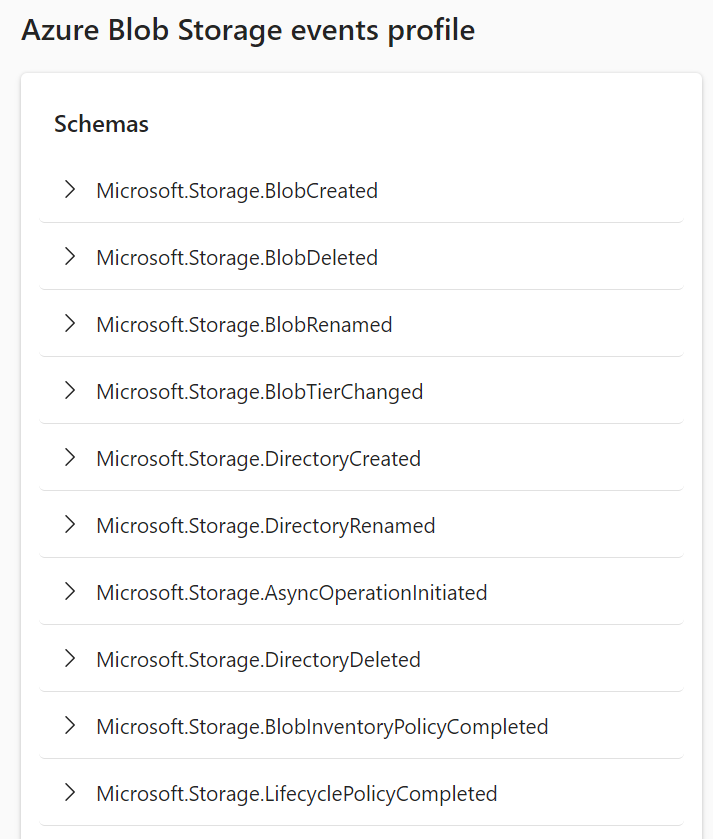
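To make the event types above a bit more concrete, here is a plain-Python sketch (not something the Real-Time Hub runs for you) of what a consumer of blob events could do with them. It assumes the events arrive in the CloudEvents schema, with `source` holding the storage account's resource ID and `subject` holding the blob path in the format Azure Storage uses. Reading the subscription ID out of `source` is how you could tell which subscription an event came from. The reactions returned by `route` are hypothetical placeholders.

```python
from dataclasses import dataclass

# Blob event types raised by Azure Storage.
BLOB_CREATED = "Microsoft.Storage.BlobCreated"
BLOB_DELETED = "Microsoft.Storage.BlobDeleted"
BLOB_RENAMED = "Microsoft.Storage.BlobRenamed"
BLOB_TIER_CHANGED = "Microsoft.Storage.BlobTierChanged"


@dataclass
class BlobEventInfo:
    event_type: str
    subscription_id: str
    storage_account: str
    container: str
    blob_path: str


def parse_blob_event(event: dict) -> BlobEventInfo:
    """Pull origin details out of a blob event (CloudEvents schema assumed)."""
    # source: /subscriptions/{id}/resourceGroups/{rg}/providers/Microsoft.Storage/storageAccounts/{account}
    parts = event["source"].strip("/").split("/")
    subscription_id = parts[parts.index("subscriptions") + 1]
    storage_account = parts[parts.index("storageAccounts") + 1]
    # subject: /blobServices/default/containers/{container}/blobs/{path}
    _, _, rest = event["subject"].partition("/containers/")
    container, _, blob_path = rest.partition("/blobs/")
    return BlobEventInfo(event["type"], subscription_id, storage_account, container, blob_path)


def route(event: dict) -> str:
    """Map each blob event type to a hypothetical reaction."""
    info = parse_blob_event(event)
    target = f"{info.container}/{info.blob_path}"
    if info.event_type == BLOB_CREATED:
        return f"start load pipeline for {target}"
    if info.event_type == BLOB_DELETED:
        return f"log deletion of {target}"
    if info.event_type == BLOB_RENAMED:
        return f"update references to {target}"
    if info.event_type == BLOB_TIER_CHANGED:
        return f"review tier change of {target} in {info.storage_account}"
    return "ignore"


# Hypothetical sample event; IDs and names are made up.
sample = {
    "type": BLOB_CREATED,
    "source": "/subscriptions/1111-2222/resourceGroups/rg-data/providers/"
              "Microsoft.Storage/storageAccounts/landingstore",
    "subject": "/blobServices/default/containers/landing/blobs/sales/2024/orders.csv",
}
print(route(sample))
```

Any event type not handled explicitly falls through to `"ignore"`, so new event types in the list do not break the consumer.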

Fabric workspace item events

This category of events is completely new. We can now create alerts based on what is happening in the Fabric tenant and, from these, build a strong governance structure that notifies us when something specific and of interest happens.

For every item we can react to the events happening to it: if a user tries to create an item and that process fails, we can react to it. If a user tries to read an item they do not have access to, an alert can be sent.
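A minimal sketch of that decision logic in plain Python, assuming the workspace item event types follow the `Microsoft.Fabric.Item<Operation><Succeeded|Failed>` naming that the ItemCreateFailed event suggests. The `itemName` and `principalId` payload fields are hypothetical names; check them against the events you actually receive.

```python
from typing import Optional, Tuple

# Operations assumed to be covered by workspace item events,
# each with a Succeeded and a Failed variant.
OPERATIONS = ("Create", "Update", "Delete", "Read")


def classify(event_type: str) -> Tuple[str, bool]:
    """Split e.g. 'Microsoft.Fabric.ItemCreateFailed' into ('Create', False)."""
    name = event_type.rsplit(".", 1)[-1]  # drop the 'Microsoft.Fabric.' prefix
    for op in OPERATIONS:
        if name == f"Item{op}Succeeded":
            return op, True
        if name == f"Item{op}Failed":
            return op, False
    raise ValueError(f"not a workspace item event: {event_type}")


def alert_text(event: dict) -> Optional[str]:
    """Return an alert message for failed operations, None for successes."""
    op, succeeded = classify(event["type"])
    if succeeded:
        return None
    data = event.get("data", {})
    item = data.get("itemName", "<unknown item>")     # hypothetical field name
    user = data.get("principalId", "<unknown user>")  # hypothetical field name
    if op == "Read":
        # A failed read is treated as a possible access problem.
        return f"{user} failed to read {item}: possible missing access"
    return f"{op.lower()} of {item} by {user} failed"
```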

Build the solution

It is quite easy to create alerts, or start another process, based on these events. You are guided through the entire setup, and the result is a Reflex item that needs to be saved in a workspace.

Start in the Real-Time Hub and find your way to the “Fabric events” area.

From here, click the green “Set Alert” button and follow the guide.

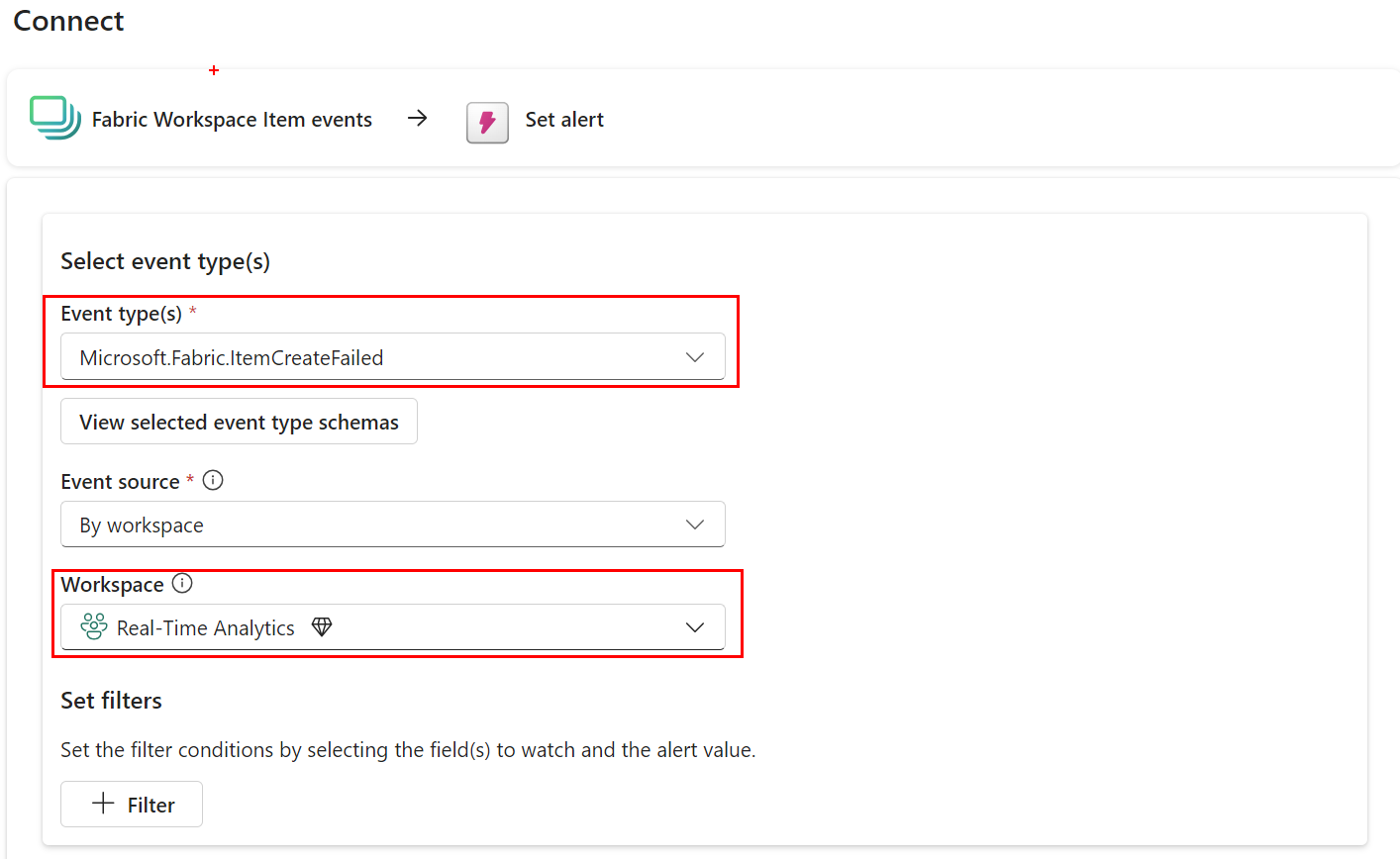

Here I select the Fabric workspace item events, the ItemCreateFailed event and the specific workspace I want to listen to.

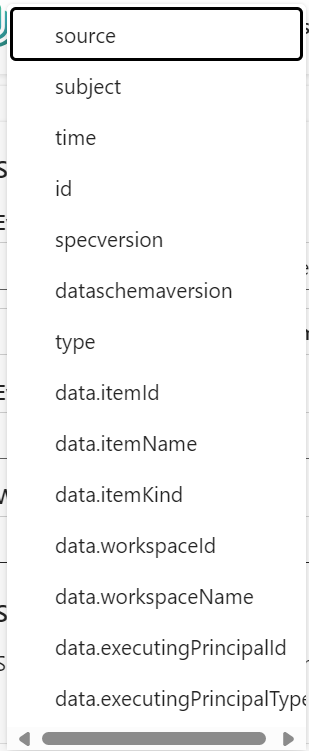

Next up is filtering. Here I can create filters on the events happening, choosing between all the fields in the result set…

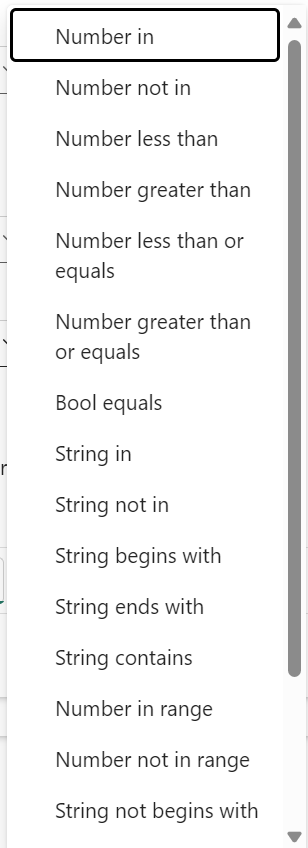

…and define my own filter statements.

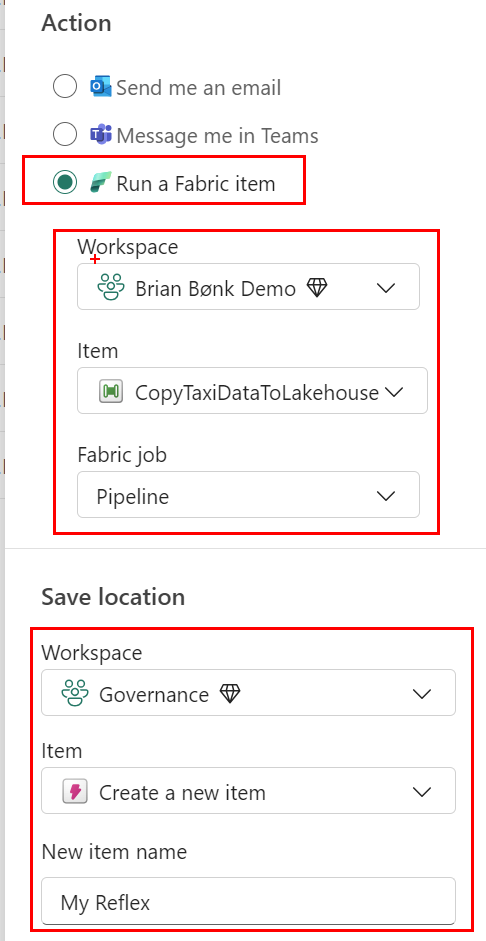
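The Reflex item stores its filters in its own format, so the following is only an illustration of the idea: a set of field conditions that must all hold before an event triggers the action. The operator names, the condition tuple and field paths such as `data.itemKind` are hypothetical.

```python
import operator
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical operator names, loosely mirroring what a filter UI offers.
OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "equals": operator.eq,
    "not equals": operator.ne,
    "contains": lambda field, value: value in str(field),
    "starts with": lambda field, value: str(field).startswith(value),
}

# A condition is (dotted field path, operator name, value to compare with).
Condition = Tuple[str, str, Any]


def get_field(event: dict, path: str) -> Optional[Any]:
    """Follow a dotted path such as 'data.itemKind'; None if any key is missing."""
    current: Any = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def matches(event: dict, conditions: List[Condition]) -> bool:
    """True only if every condition holds (the conditions are AND-ed)."""
    for path, op_name, value in conditions:
        field = get_field(event, path)
        if field is None or not OPS[op_name](field, value):
            return False
    return True
```

Treating a missing field as "no match" keeps the filter conservative: an event with an unexpected shape never fires the action.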

When I’m happy with the setup, I can go to the next step and configure the action. Here I want to execute another Fabric item.

Yes, I can execute other Fabric items based on events happening in the workspace. This could be a Notebook, a pipeline or something else. Just imagine the possibilities here.

The last thing is to define where the Reflex item is saved.

And that’s it.
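The Reflex action runs the Fabric item for you. If you ever want to start a Fabric item from your own code instead, the Fabric REST API has a job scheduler endpoint for running an item on demand. The sketch below only builds that request with the standard library and does not send it. The workspace and item IDs are placeholders, the `jobType` values (`Pipeline` for data pipelines, `RunNotebook` for notebooks) should be verified against the Fabric REST API reference, and obtaining the Microsoft Entra ID token is left out.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_run_request(
    workspace_id: str,
    item_id: str,
    job_type: str,
    token: str,
    execution_data: Optional[dict] = None,
) -> urllib.request.Request:
    """Build (but do not send) a 'run on demand item job' request."""
    query = urllib.parse.urlencode({"jobType": job_type})
    url = f"{FABRIC_API}/workspaces/{workspace_id}/items/{item_id}/jobs/instances?{query}"
    # executionData is optional; its expected shape depends on the item type.
    body = {"executionData": execution_data} if execution_data else {}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned request to `urllib.request.urlopen` would send it; that requires a valid token with permission on the workspace.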

Perspectives

With a few mouse clicks and a bit of item naming, I’ve created an event-based solution that executes a Fabric item when an event happens in my Fabric installation.

I could see a vendor building standard functionality on top of this to give insight into the entire governance side of Fabric (beyond the parts already covered by Purview): what is happening in my Fabric installation, who is doing what, and how the structure is being built over time. This could also be used to gain insight into the adoption of Fabric.
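As a small taste of that idea, the sketch below aggregates a batch of workspace item events into “who is doing what” and “how many items are created per month” counts. It assumes the events have already been collected into a list, that they follow the CloudEvents schema with a `time` attribute, and that the user is found in a hypothetical `data.principalId` field.

```python
from collections import Counter
from typing import Dict, Iterable


def summarize(events: Iterable[dict]) -> Dict[str, Counter]:
    """Count workspace item events per user, per event name and per month."""
    by_user: Counter = Counter()
    by_event: Counter = Counter()
    created_per_month: Counter = Counter()
    for event in events:
        name = event["type"].rsplit(".", 1)[-1]  # e.g. 'ItemCreateSucceeded'
        user = event.get("data", {}).get("principalId", "<unknown>")  # hypothetical field
        by_user[user] += 1
        by_event[name] += 1
        if name == "ItemCreateSucceeded":
            # CloudEvents 'time' is an RFC 3339 timestamp; the first 7 chars are YYYY-MM.
            created_per_month[event["time"][:7]] += 1
    return {"by_user": by_user, "by_event": by_event, "created_per_month": created_per_month}
```

Tracking successful creations per month gives a simple adoption curve, while the per-user counts show who is actually building in the tenant.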

I hope you can see your own usage scenarios from this function and will try to implement it into your solutions.