Take Control of your data streams

With the release of Real-Time Intelligence in Fabric (read more here), we now have new services to work with, which can help us govern our data.

One of the main data elements in Fabric is streaming data - given by the Real-Time Intelligence services.

The new kid on the block is the Real-Time Hub. It is available as a new left-menu item on every page in Fabric:

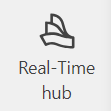

This service provides a complete overview of all your existing streaming data (Data Streams), the options you have for creating new ones (Microsoft sources) and event-based triggers (Fabric Events).

Data Streams

Here you get a complete overview of everything going on in your Fabric environment around streaming data. You can see both Eventstreams and KQL Databases. With columns such as Owner, Location, Endorsement and Sensitivity, the overview is complete and gives you everything you need to govern and maintain your streaming data.

You can filter based on Stream Type, Owner, Item and Location - the last one (Location) is the name of the workspace where the item resides.

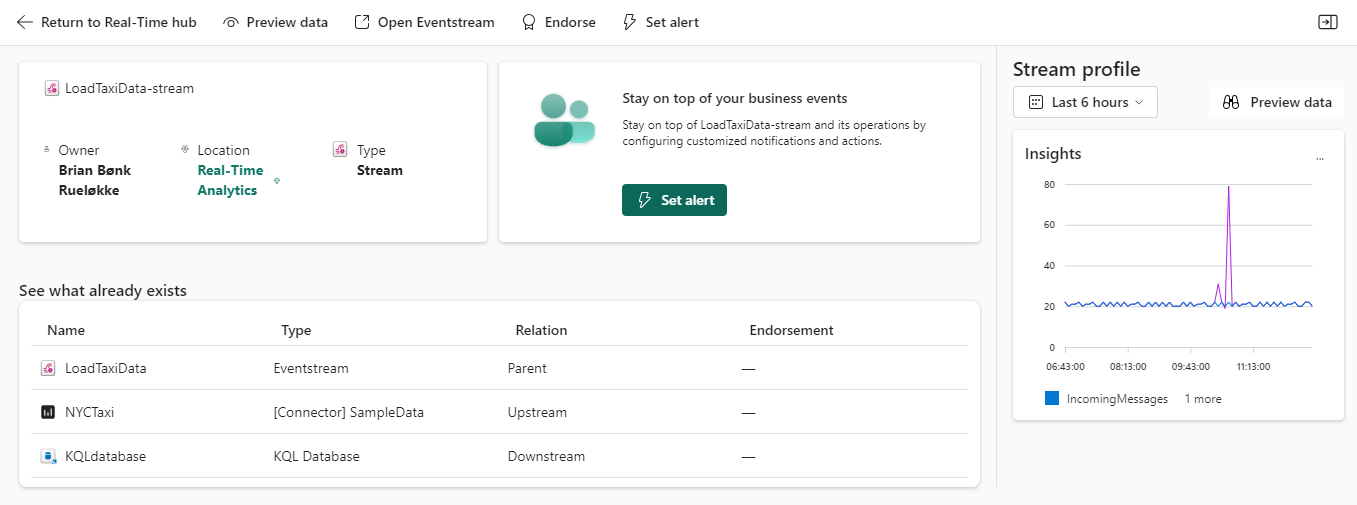

When clicking one of the elements in this list, you will be guided to the details of that item. The details shown will differ based on the item type.

If you click an existing Eventstream, you will see the elements around it - Parent Element, Upstream and Downstream elements.

At the top, you have quick access to Preview data, Open Eventstream, Endorse or set an Alert.

If an item is endorsed, you will see it marked in the Endorsement column.

To the left, you get a glimpse of the Stream profile - what has flowed in and out of that item in the last 6 hours. The time filter can be changed to fit your needs.

Set Alert directly

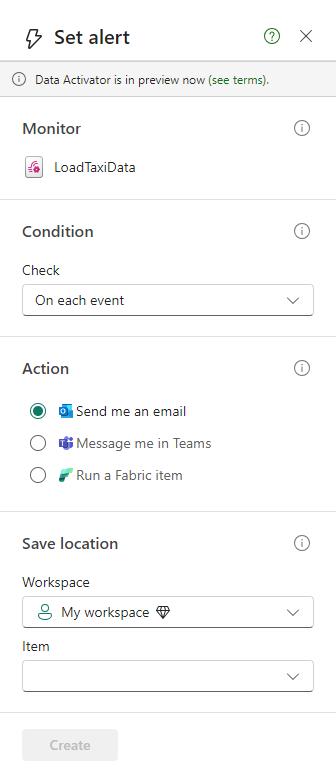

From this view of the Eventstream, I can quickly set an alert by using the quick access menu bar at the top.

Clicking this menu item gives me a new window to the right in the browser, where I can configure and create a new Data Activator element with a few clicks.

You can choose between three conditions.

- On each event

- On each event When

- On each event Grouped by

The first is the plain one: for each event happening in the Eventstream, do something.

The next two are with a bit more juice.

On each event When allows you to filter the incoming data: when an event arrives with a certain attribute value in a selected column, then do something.

On each event Grouped by allows you to group by a selected column AND filter on a column (the two do not have to be the same).
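
To make the difference between the three conditions concrete, here is a small conceptual sketch in plain Python. It is not the Data Activator engine or its API; the function names, the sample events and the column names (`device`, `temperature`) are all made up for illustration, and the grouping behaviour is only my simplified reading of the option.

```python
from typing import Any, Callable, Dict, Iterable, List

Event = Dict[str, Any]


def on_each_event(events: Iterable[Event]) -> List[Event]:
    """'On each event': every incoming event fires the action."""
    return list(events)


def on_each_event_when(
    events: Iterable[Event], column: str, predicate: Callable[[Any], bool]
) -> List[Event]:
    """'On each event When': only events whose column value passes the filter fire."""
    return [e for e in events if column in e and predicate(e[column])]


def on_each_event_grouped_by(
    events: Iterable[Event],
    group_column: str,
    filter_column: str,
    predicate: Callable[[Any], bool],
) -> Dict[Any, List[Event]]:
    """'On each event Grouped by': filter on one column, track matches per group value."""
    groups: Dict[Any, List[Event]] = {}
    for e in on_each_event_when(events, filter_column, predicate):
        groups.setdefault(e.get(group_column), []).append(e)
    return groups


# Hypothetical sample data, not taken from a real Eventstream.
sample_events = [
    {"device": "pump-1", "temperature": 71},
    {"device": "pump-2", "temperature": 95},
    {"device": "pump-1", "temperature": 99},
]

hot = on_each_event_when(sample_events, "temperature", lambda t: t > 90)
by_device = on_each_event_grouped_by(
    sample_events, "device", "temperature", lambda t: t > 90
)
```

Note that the group column (`device`) and the filter column (`temperature`) are different here, which is exactly the flexibility the third option gives you.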

For the action, you can choose to send an email, send a Teams message or, as the brand new option, run a Fabric item. With a few clicks, you can get an Eventstream to execute a Fabric item based on the filters you want.

Lastly, select the place where you want the Data Activator element to be saved - a so-called Reflex. But please do not save it in your “My Workspace”.

Everything is guided and built for you, so you just have to do the clicks and create the alert as needed.

THAT’S EASY!

Microsoft sources

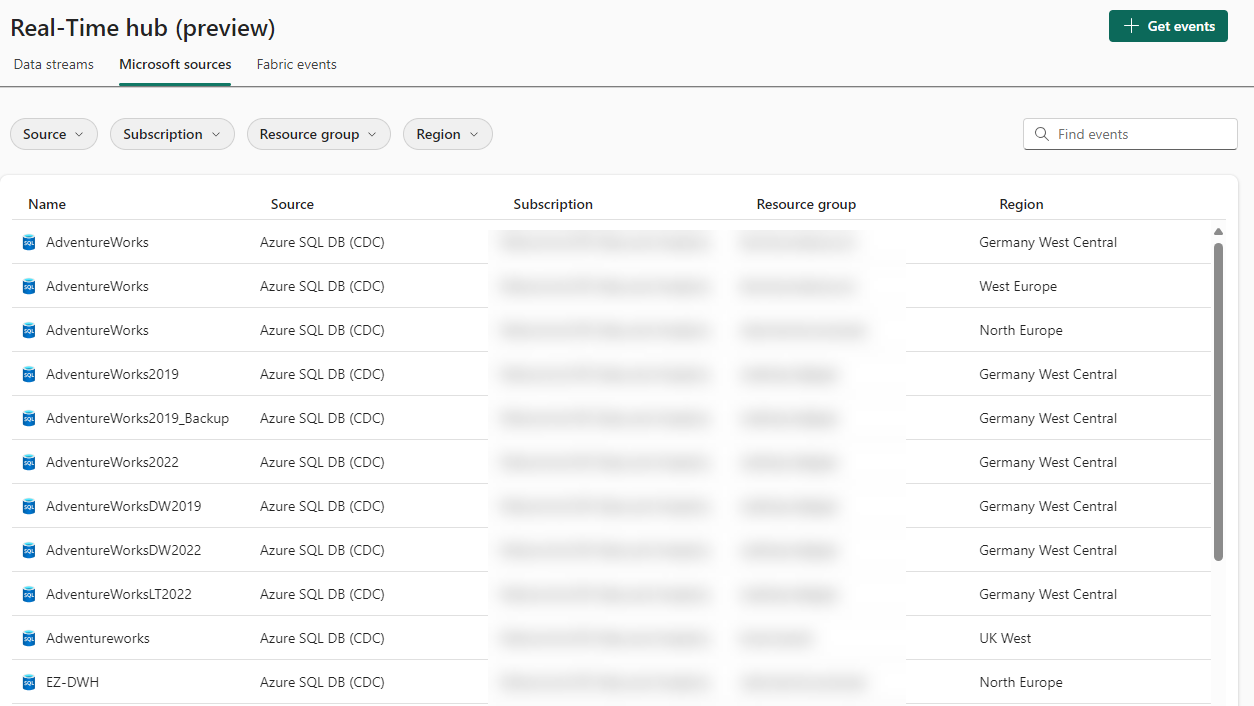

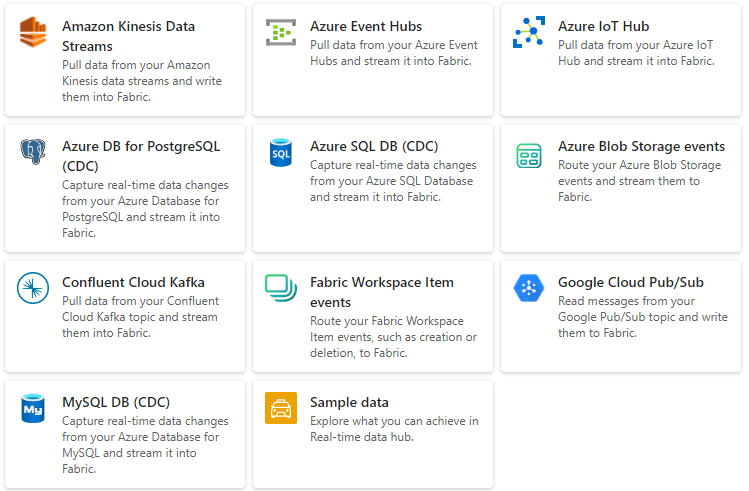

This part of the Real-Time Hub is the new entry point for enabling streaming sources to land in Fabric.

The list shows all the elements you have access to in Azure: SQL Databases, Event Hubs, IoT Hubs, etc. These are all the Azure elements which can be enabled to stream data in near-real-time to Fabric. This is done with a few clicks and through a guided flow.

If you cannot find the needed item or need to create a new one, the button at the top left guides you to create what you need.

Notice that we can also get streaming data from non-Microsoft sources like Amazon Kinesis, Confluent Cloud Kafka and Google Cloud Pub/Sub.

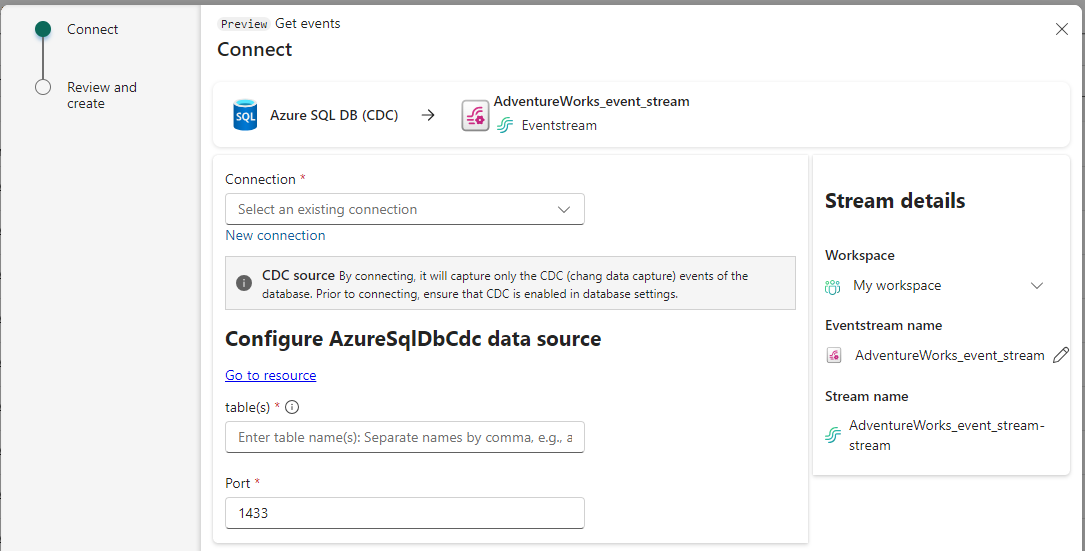

When ready to create the streams of data, simply click the item and follow the guide to configure your data stream. In the example below, it is a CDC stream from a SQL Server.

Note that the default place to create elements is (unfortunately) in “My Workspace” - please change that to a dedicated Fabric workspace.

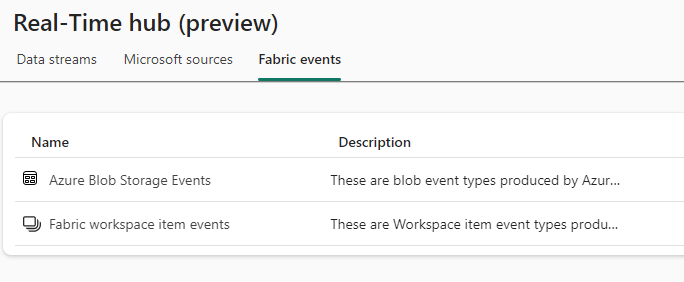

Fabric Events

The brand new and long-awaited feature to do something based on what is happening in Fabric.

Formerly, in Synapse Analytics, we could start a pipeline when a file landed in a storage account. With this release of Fabric Events, we can now do that, and way more…

For now, the list of Azure Blob Storage events you can act on is below - and I imagine this list will grow much longer as time goes by. I would love to see more event types become available for firing a trigger.

- Blob Created

- Blob Deleted

- Blob Renamed

- Blob Tier Changed

- Directory Created

- Directory Renamed

- Directory Deleted

- Async Operation Initiated

- Blob Inventory Policy Completed

- Lifecycle Policy Completed
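
As a rough illustration of the "start something when a file lands" idea, the sketch below routes events to an action based on their type. The type strings are the Azure Event Grid names for blob events; everything else (the trimmed payload shape, the handler functions and their return values) is hypothetical and only there to show the pattern, not how Fabric implements it.

```python
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]

# Event Grid type strings for two of the blob events in the list above.
BLOB_CREATED = "Microsoft.Storage.BlobCreated"
BLOB_DELETED = "Microsoft.Storage.BlobDeleted"


def start_pipeline(event: Event) -> str:
    """Hypothetical action: stands in for running a Fabric item."""
    return f"pipeline started for {event['subject']}"


def log_deletion(event: Event) -> str:
    """Hypothetical action: stands in for a notification."""
    return f"deletion logged for {event['subject']}"


# Only the event types listed here trigger anything; the rest are ignored.
HANDLERS: Dict[str, Callable[[Event], str]] = {
    BLOB_CREATED: start_pipeline,
    BLOB_DELETED: log_deletion,
}


def dispatch(events: List[Event]) -> List[str]:
    """Run the matching handler for each event that has one."""
    results = []
    for event in events:
        handler = HANDLERS.get(event.get("type"))
        if handler is not None:
            results.append(handler(event))
    return results


# Trimmed, made-up payloads: real events carry many more fields.
incoming = [
    {"type": BLOB_CREATED,
     "subject": "/blobServices/default/containers/landing/blobs/sales.csv"},
    {"type": "Microsoft.Storage.BlobTierChanged",
     "subject": "/blobServices/default/containers/landing/blobs/old.csv"},
    {"type": BLOB_DELETED,
     "subject": "/blobServices/default/containers/landing/blobs/tmp.csv"},
]

outcome = dispatch(incoming)
```

In the Real-Time Hub you get this routing through the guided configuration instead of writing it yourself.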

The list of Fabric workspace events is below - again, I hope this list will grow longer as time goes by.

- Item Create Succeeded

- Item Create Failed

- Item Update Succeeded

- Item Update Failed

- Item Delete Succeeded

- Item Delete Failed

- Item Read Succeeded

- Item Read Failed

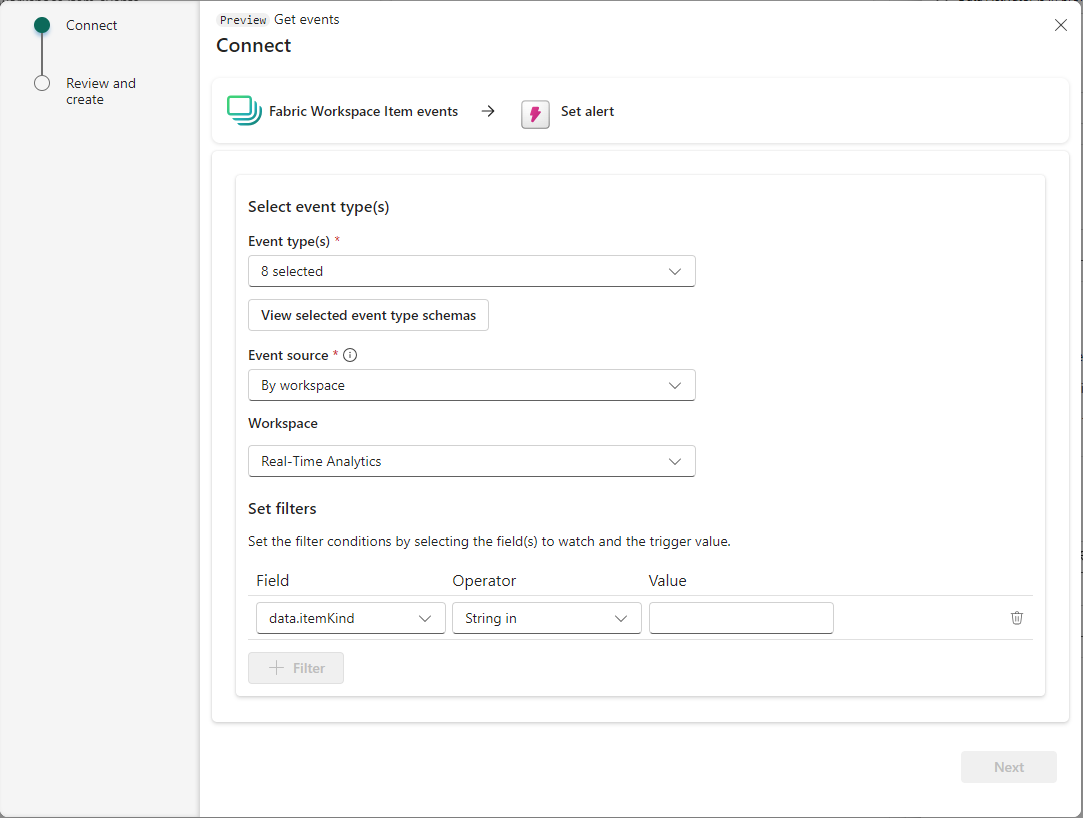

When configuring the Fabric Events, you are, again, guided to get stuff done.

BUT - I wish for a bit more here. In the filter part of the configuration, I may not know the possible values for a condition. Take data.itemKind in the example below - when specific columns are selected, it would be nice to have a dropdown of options where applicable.
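
Until such a dropdown exists, one workaround is to look at a handful of sample events and collect the values yourself. The sketch below does that for a dotted field path like data.itemKind. The helper names, the payload shape and the item kinds (`Lakehouse`, `Notebook`) are assumptions for illustration; check a real event preview for the actual fields and values.

```python
from typing import Any, Dict, Iterable, List

Event = Dict[str, Any]


def get_path(event: Event, path: str, default: Any = None) -> Any:
    """Resolve a dotted path such as 'data.itemKind' in a nested dict."""
    current: Any = event
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


def distinct_values(events: Iterable[Event], path: str) -> List[Any]:
    """Collect the distinct values seen at a path, in first-seen order."""
    seen: List[Any] = []
    for event in events:
        value = get_path(event, path)
        if value is not None and value not in seen:
            seen.append(value)
    return seen


def matches(event: Event, path: str, expected: Any) -> bool:
    """The filter itself: does the field at this path equal the expected value?"""
    return get_path(event, path) == expected


# Hypothetical sample events; field names and values are assumed.
samples = [
    {"type": "Microsoft.Fabric.ItemCreateSucceeded", "data": {"itemKind": "Lakehouse"}},
    {"type": "Microsoft.Fabric.ItemCreateSucceeded", "data": {"itemKind": "Notebook"}},
    {"type": "Microsoft.Fabric.ItemUpdateSucceeded", "data": {"itemKind": "Lakehouse"}},
    {"type": "Microsoft.Fabric.ItemReadFailed", "data": {}},
]

options = distinct_values(samples, "data.itemKind")
lakehouse_events = [e for e in samples if matches(e, "data.itemKind", "Lakehouse")]
```

The `options` list is essentially the content I would like the dropdown to show.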

All in all

A great new feature and addition to the overview of everything around streaming data and real-time intelligence.

With the Real-Time Hub and its Data Streams, Microsoft Sources and Fabric Events, we get capabilities like no one could ever have imagined. And I also believe that this gives Microsoft Fabric something more, which makes the distance to the competitors even longer.